The AI configuration section in DataTalk enables users to seamlessly integrate AI capabilities into their projects, enhancing automation, decision-making, and data analysis. By leveraging AI, users can gain insights, automate repetitive tasks, analyze patterns, predict outcomes, or even provide intelligent responses to specific queries. This makes the AI module an invaluable tool for projects in various industries, such as manufacturing, monitoring, logistics, or data-driven decision-making.

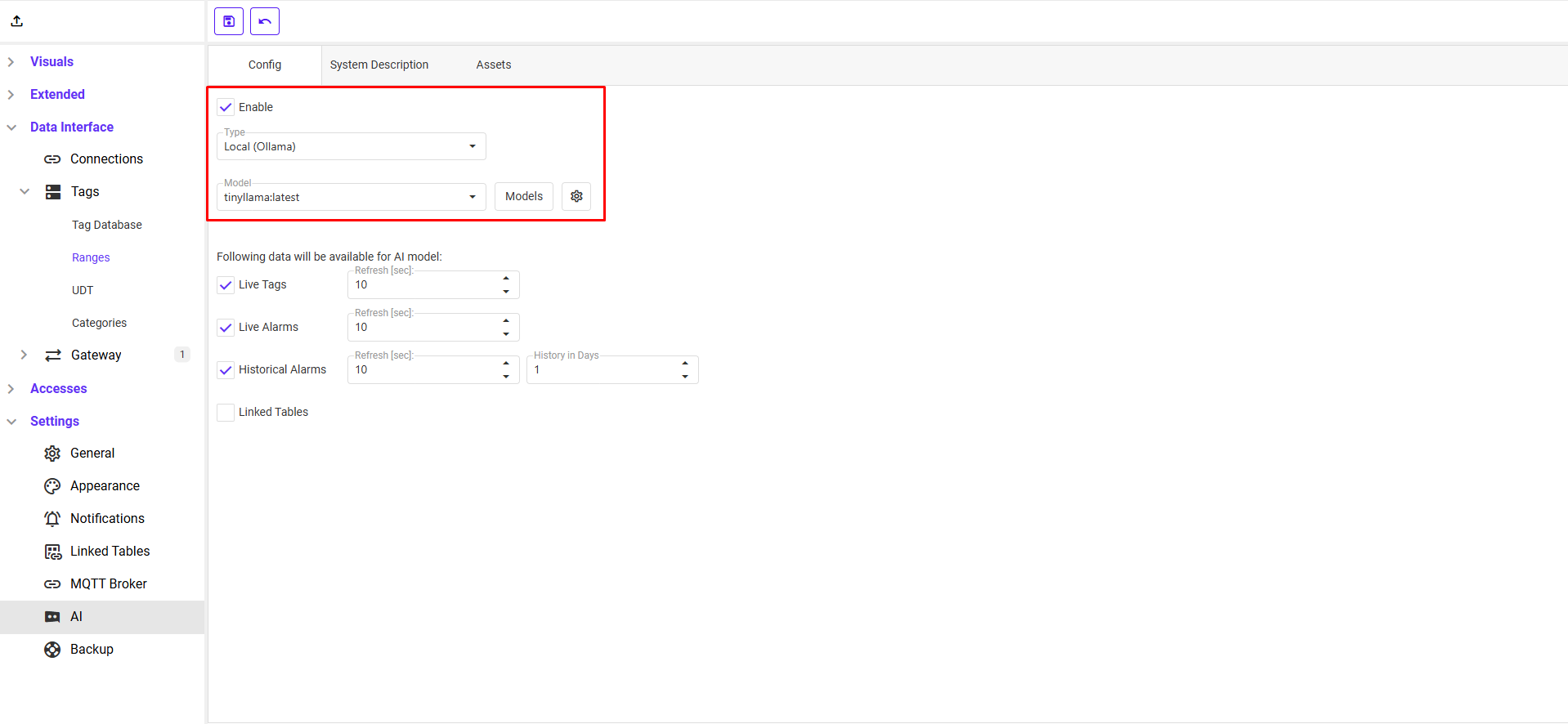

Step 1: Select AI Type and Model

Choose the desired AI type and then select the appropriate AI model. Depending on the model, you may need to provide an API key (e.g., for OpenAI’s API) or specify a device equipped with AI capabilities.

If you host the AI model locally with Ollama, keep in mind that the plugin must be running and that you must import the model first. Some AI models require more than 80 GB of storage.

Ollama can be hosted locally using the plugin manager integrated in DataTalk Manager.

Alternatively, you can install and host Ollama on another device and enter that device’s IP address and port using the button next to the Models button.
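
Before entering the address of a remote Ollama host, it can help to confirm that the server is reachable and that the model has already been imported there. The following is a minimal sketch, not part of DataTalk: it assumes Ollama's standard REST endpoint `GET /api/tags` and its default port 11434, and the helper names and the example address are hypothetical.

```python
import json
import urllib.request


def ollama_base_url(host: str, port: int = 11434) -> str:
    """Build the base URL of an Ollama server (11434 is Ollama's default port)."""
    return f"http://{host}:{port}"


def parse_model_names(tags_response: dict) -> list:
    """Extract model names from the JSON body returned by GET /api/tags."""
    return [model["name"] for model in tags_response.get("models", [])]


def list_remote_models(host: str, port: int = 11434, timeout: float = 5.0) -> list:
    """Ask the Ollama server which models have already been imported.

    Raises OSError (e.g. urllib.error.URLError) if the server is unreachable.
    """
    url = ollama_base_url(host, port) + "/api/tags"
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return parse_model_names(json.load(response))
```

For example, `list_remote_models("192.168.1.50")` (a placeholder address) would return the names of the models available on that host, or raise an `OSError` if nothing is listening on the given IP and port.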

Also keep in mind that, depending on the size of the AI model, it might take a couple of minutes for the model to start. When requests are processed locally, they run on the device itself and consume some of its CPU and RAM.

The selection of an OpenAI model not only determines the technological sophistication and complexity of responses but also directly impacts the number of credits deducted by the OpenAI API for each request.

Step 2: Configure Variables and Intervals

At the bottom of the section, define the variables the AI can utilize and set the intervals at which data is processed. This configuration can significantly impact the computational load or the consumption of API credits if external AI services like OpenAI are used.
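
To get a feel for how the interval drives load and cost, the arithmetic can be sketched as follows. This is an illustrative estimate only: the function names, the tokens-per-request figure, and the price are assumptions, not DataTalk settings or actual OpenAI prices.

```python
SECONDS_PER_DAY = 86_400


def requests_per_day(interval_seconds: float) -> float:
    """Number of AI requests generated per day for a given processing interval."""
    if interval_seconds <= 0:
        raise ValueError("interval_seconds must be positive")
    return SECONDS_PER_DAY / interval_seconds


def estimated_daily_cost(interval_seconds: float,
                         tokens_per_request: float,
                         price_per_1k_tokens: float) -> float:
    """Rough daily cost: requests per day x tokens per request x price per 1,000 tokens.

    tokens_per_request and price_per_1k_tokens are hypothetical inputs; take the
    real values from your own usage data and the provider's current price list.
    """
    total_tokens = requests_per_day(interval_seconds) * tokens_per_request
    return total_tokens / 1000 * price_per_1k_tokens
```

With a 60-second interval the AI is called 1,440 times per day, while a 5-minute interval reduces that to 288 calls, so lengthening the interval is the most direct way to reduce load or credit consumption.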

Step 3: System Description

In the System Description field, provide a detailed overview of the project. Include its purpose, what it logs or monitors, and its industry. This comprehensive description ensures that the AI model understands the project clearly, enabling it to deliver more accurate and relevant responses.
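
Chat-style AI APIs commonly receive this kind of background text as a "system" message that precedes the user's question. The sketch below illustrates that general pattern only; how DataTalk passes the System Description to the model internally is not documented here, and the function name and sample texts are hypothetical.

```python
def build_messages(system_description: str, question: str) -> list:
    """Combine a project description and a user question into a chat message list.

    The role/content structure follows the common chat-completion convention;
    it is an assumption that a tool would forward the description this way.
    """
    return [
        {"role": "system", "content": system_description.strip()},
        {"role": "user", "content": question.strip()},
    ]


# Hypothetical example of a description covering purpose, monitored data, and industry.
example = build_messages(
    "This project monitors temperature and vibration of CNC machines "
    "in a manufacturing plant and logs values every minute.",
    "Which machine showed unusual vibration today?",
)
```

The more specific the description is about purpose, logged values, and industry, the more context the model has for every question that follows it.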

Step 4: Upload Assets

The Assets section allows you to upload documents that the AI can reference. These may include technical manuals, equipment guides, or engineering drawings, enhancing the AI’s ability to provide contextually informed answers.

This structured setup empowers users to tailor AI functionality to their project’s unique requirements, maximizing efficiency and effectiveness.

Running AI Locally with the DataTalk Manager Plugin (Ollama)

Running AI models locally using the DataTalk Manager plugin, Ollama, requires high-performance hardware, preferably with a dedicated external GPU (even then, the AI model is not guaranteed to fit into the GPU’s memory). Insufficient hardware resources may result in slow response times, decreased accuracy in chatbot answers, and prolonged startup times.

The initialization time of the AI chatbot depends on the model’s complexity and size, with some models requiring over 80 GB of storage. Additionally, specific models may have limited capabilities in languages other than English, potentially affecting communication quality.

It is recommended to run Ollama on an external server equipped with dedicated hardware for optimal performance. Processing AI requests locally without adequate hardware resources may degrade chatbot performance and significantly impact overall system responsiveness.

If the hardware is insufficient, we recommend using the OpenAI API to process the requests externally.

Please keep in mind that the OpenAI API requires a stable internet connection: requests are sent to the OpenAI servers for processing, and the answers are then sent back.

Recommended “lightweight” AI models capable of communicating in both EN / CS – sufficient HW required / optional!

- Gemma 3 – approx. size 3.3GB (4b)

Gemma 3 is part of Google DeepMind’s Gemma model series, designed to be lightweight, efficient, and optimized for real-world applications. These models focus on high performance in AI-powered chatbots, text generation, and other NLP tasks. Key characteristics of the series include:

Small model sizes (e.g., 1B and 4B for Gemma 3; 2B and 7B for earlier versions), which make them well suited to on-device or cloud-based AI solutions.

Strong alignment with Google’s AI ecosystem, allowing seamless integration with tools like Vertex AI.

An efficiency-focused design, balancing power with minimal resource consumption for AI deployment.

Gemma 3 refines Google’s approach to compact yet powerful AI models, making AI more accessible for developers and enterprises.

- llama 3 – approx. size 4.7GB (8b)

Llama 3 is a generation of Meta’s Llama (Large Language Model Meta AI) series, designed to provide state-of-the-art AI language capabilities. It features:

Multiple parameter sizes: a lightweight 8B model (the one listed here) and a large-scale 70B version.

Improved efficiency and reasoning, enhancing both performance and usability for research and enterprise applications.

Multilingual capabilities, extending its usability beyond English-focused applications.

Llama 3 targets AI-driven text generation, reasoning, and coding tasks, making it a versatile tool for developers and businesses.

- Mistral – approx. size 3.6GB (7b)

Mistral is a family of open-weight AI language models developed by Mistral AI. These models are designed for efficiency and strong reasoning capabilities, making them suitable for various natural language processing tasks. Mistral models come in different sizes, with the most well-known being:

Mistral 7B – A 7-billion parameter model optimized for performance and efficiency.

Mixtral (8x7B) – A mixture-of-experts model that selectively activates two of its eight experts for each token, enhancing computational efficiency.

Mistral models are known for their speed, cost-effectiveness, and ability to generate high-quality text outputs while being open-source and adaptable.

Recommended “heavy/middleweight” AI models capable of communicating in EN / CS – sufficient HW required!

Any model with a higher number of parameters (12B+) is more sophisticated but also places higher demands on the hardware.

- Gemma3 – approx. size 17GB (27b)

Gemma 3 is an open-weight AI language model developed by Google DeepMind, designed for high efficiency and strong reasoning capabilities. It builds upon previous Gemma models, offering improved text generation, multilingual processing, and adaptability for various AI applications. Gemma 3 balances computational cost and performance, making it suitable for research, development, and enterprise use.

- llama3.3 – approx. size 43GB (70b)

Llama 3.3 is a large-scale AI language model by Meta AI, optimized for complex reasoning, code generation, and multilingual tasks. As part of the Llama family, it improves upon its predecessors with enhanced efficiency, better text coherence, and a broader knowledge base. With 70 billion parameters, it is designed for advanced AI applications in research and business.

- mistral-large – approx. size 73GB (123b)

Mistral Large is a high-performance AI model from Mistral AI, built for enterprise-level natural language processing. With 123 billion parameters, it competes with leading proprietary models, excelling in reasoning, code generation, and text comprehension. It offers state-of-the-art capabilities for AI-driven applications while maintaining an open-weight approach for adaptability and transparency.
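
The approximate sizes listed in this section can be compared against the memory available on the target device to shortlist candidate models. The following is a rough sketch under stated assumptions: the dictionary keys are illustrative labels, the sizes are the approximate figures from this page, and the 20 % headroom factor is an assumed safety margin for runtime overhead, not a measured value.

```python
# Approximate model sizes in GB, taken from the lists in this section.
MODEL_SIZES_GB = {
    "gemma3:4b": 3.3,
    "llama3:8b": 4.7,
    "mistral:7b": 3.6,
    "gemma3:27b": 17.0,
    "llama3.3:70b": 43.0,
    "mistral-large:123b": 73.0,
}


def models_that_fit(available_gb: float, headroom: float = 1.2) -> list:
    """Return the models whose size, plus an assumed headroom, fits into memory.

    available_gb is the free GPU memory (or RAM when running on the CPU).
    headroom=1.2 reserves roughly 20 % extra for runtime overhead (an assumption).
    """
    return [
        name
        for name, size_gb in MODEL_SIZES_GB.items()
        if size_gb * headroom <= available_gb
    ]
```

For instance, `models_that_fit(8)` shortlists only the three lightweight models, while a device with 24 GB of free memory additionally fits the 27b Gemma 3 variant. A model that passes this check can still respond slowly on weak hardware, so treat the result as a first filter only.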

Disclaimer: DataTalk is not responsible for the accuracy or correctness of the responses provided by the AI. The AI-generated answers should be interpreted with caution and not taken as absolute facts. Users are advised to verify critical information from reliable sources.